Why Switch from Hadoop to Iceberg

Written by Javier Esteban · 14 June 2025

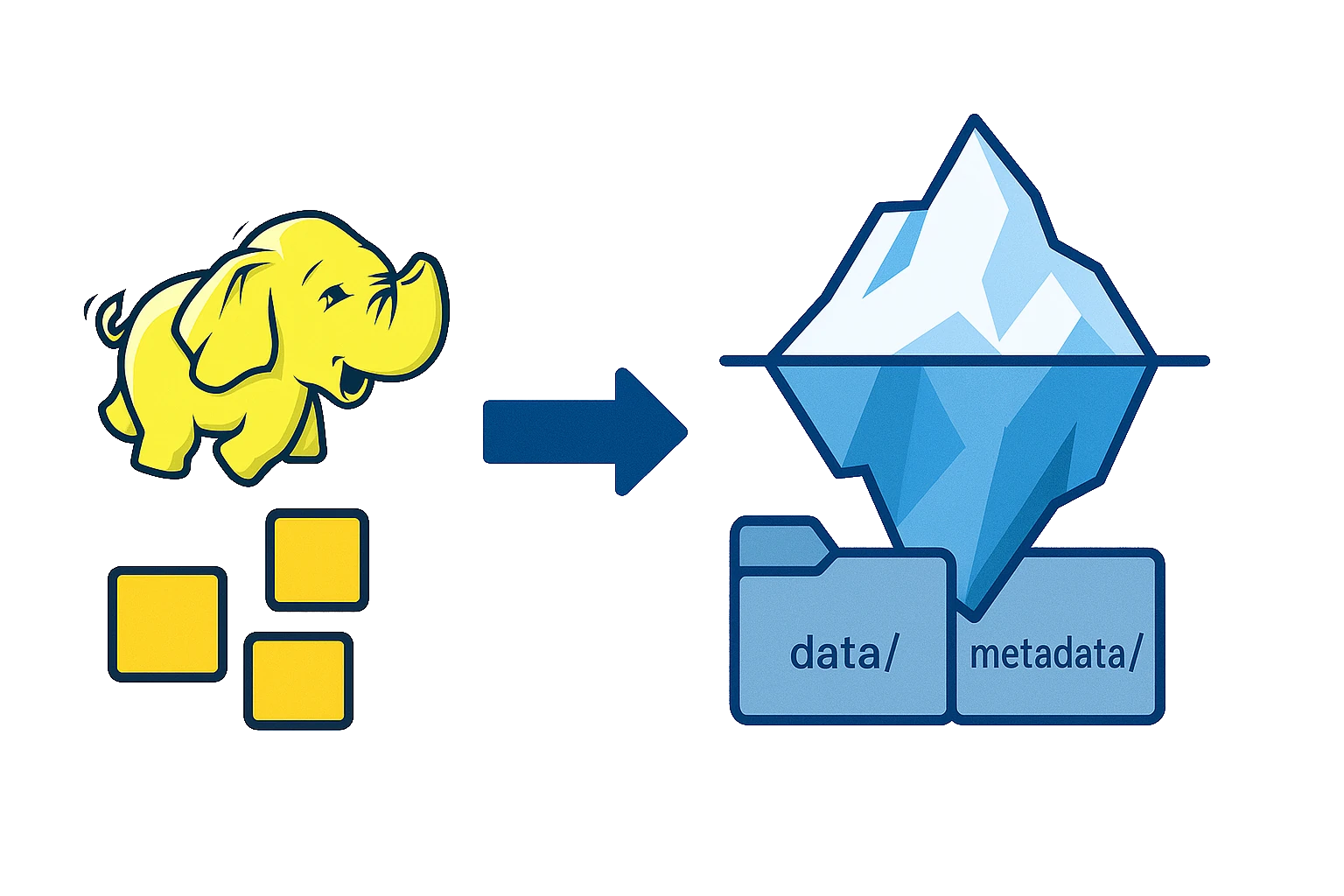

In this article we set out the reasons why Iceberg tables outperform traditional Hadoop-style (Hive) tables. We begin with a quick tour of how Iceberg works, then outline its advantages and finish with a side-by-side comparison.

How does Iceberg work?

An Iceberg table is made up of two main folders:

/my_table/
│
├── data/      ← ✅ Parquet (or ORC / Avro) files – the actual data
│
└── metadata/  ← ✅ All structured table metadata

- data/ holds the columnar files just as a Hive table would.

- metadata/ is what sets Iceberg apart. It contains:

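| File / entry | Purpose |
|---|---|
| metadata.json (e.g. v3.metadata.json) | Table metadata file: schema, partition spec, properties, the list of snapshots and the ID of the current snapshot. Every commit writes a new version of this file rather than editing it in place |
| Snapshot (recorded in metadata.json) | The full state of the table after one write operation. Each snapshot points to one manifest list, which is what makes roll-backs and time travel possible |
| manifest list (snap-*.avro) | One Avro file per snapshot listing every manifest in that snapshot, with a summary of the partition values each manifest covers |
| manifest (*.avro) | Avro index files listing the data file paths, partition values, row counts and column statistics (such as min/max values) for a group of data files |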
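To make the hierarchy concrete, here is a minimal in-memory model of it in Python. The class and field names (`TableMetadata`, `Snapshot`, `files_for`, and so on) are illustrative assumptions, not the API of Iceberg or PyIceberg; a real table stores these structures as JSON and Avro files. The point it shows is that a snapshot is just a pointer into shared, immutable metadata, so reading an older snapshot or rolling back only changes which snapshot ID is current.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataFile:
    path: str
    record_count: int


@dataclass(frozen=True)
class Manifest:
    """One manifest: an index over a group of data files."""
    path: str
    data_files: tuple[DataFile, ...]


@dataclass(frozen=True)
class Snapshot:
    """Table state after one write; holds the contents of its manifest list."""
    snapshot_id: int
    manifests: tuple[Manifest, ...]


@dataclass
class TableMetadata:
    """Simplified, hypothetical stand-in for metadata.json."""
    snapshots: dict[int, Snapshot] = field(default_factory=dict)
    current_snapshot_id: int | None = None

    def files_for(self, snapshot_id: int | None = None) -> list[str]:
        """Walk snapshot -> manifest list -> manifests -> data file paths."""
        sid = self.current_snapshot_id if snapshot_id is None else snapshot_id
        if sid is None:
            return []  # empty table: no snapshot yet
        return [f.path for m in self.snapshots[sid].manifests for f in m.data_files]


# Two writes: the second snapshot reuses the first write's manifest unchanged.
m1 = Manifest("metadata/m1.avro", (DataFile("data/a.parquet", 100),))
m2 = Manifest("metadata/m2.avro", (DataFile("data/b.parquet", 50),))
meta = TableMetadata(
    snapshots={1: Snapshot(1, (m1,)), 2: Snapshot(2, (m1, m2))},
    current_snapshot_id=2,
)

print(meta.files_for())   # ['data/a.parquet', 'data/b.parquet']
print(meta.files_for(1))  # time travel: ['data/a.parquet']

# Roll-back: point the table at the older snapshot; no data file is rewritten.
meta.current_snapshot_id = 1
print(meta.files_for())   # ['data/a.parquet']
```

Sharing `m1` between both snapshots mirrors how a new snapshot can reference existing manifests instead of copying them.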

A write operation, step by step

- Parquet files are written.

- New manifests are generated to describe those files.

- A new manifest list is produced, combining the new and existing manifests.

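- A new snapshot is created, pointing at that manifest list.

- A new metadata.json is written that includes the new snapshot, and the catalog's pointer is atomically switched from the previous metadata file to this one. Until that switch, readers see none of the new files.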
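The last step is what makes an Iceberg write atomic: the catalog keeps a single pointer to the table's current metadata file, and a writer publishes its work by swapping that pointer from the version it started from to its new file. If another writer got there first, the swap fails and the write is retried on top of the newer state. Below is a minimal Python sketch of that optimistic compare-and-swap, assuming an in-memory catalog; `InMemoryCatalog`, `commit`, `CommitConflictError` and the file names are hypothetical and not part of any Iceberg library.

```python
from __future__ import annotations

import threading


class CommitConflictError(Exception):
    """Another writer committed first; the write must be retried on the new state."""


class InMemoryCatalog:
    """Hypothetical catalog: one pointer per table to its current metadata file."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._pointers: dict[str, str] = {}

    def current(self, table: str) -> str | None:
        return self._pointers.get(table)

    def swap(self, table: str, expected: str | None, new: str) -> None:
        """Atomic compare-and-swap of the table pointer."""
        with self._lock:
            if self._pointers.get(table) != expected:
                raise CommitConflictError(f"{table} changed since {expected!r} was read")
            self._pointers[table] = new


def commit(catalog: InMemoryCatalog, table: str, base: str | None, new_metadata: str) -> None:
    """Publish a write that was prepared on top of metadata file `base`."""
    # Data files, manifests, the manifest list and the new metadata file are
    # assumed to be written already; none of them is visible to readers yet.
    # The single pointer swap below makes the whole write visible at once.
    catalog.swap(table, expected=base, new=new_metadata)


catalog = InMemoryCatalog()
commit(catalog, "db.events", None, "metadata/v1.metadata.json")

# Two writers both start from v1.
base = catalog.current("db.events")
commit(catalog, "db.events", base, "metadata/v2.metadata.json")  # writer A wins

conflict = False
try:
    commit(catalog, "db.events", base, "metadata/v2-b.metadata.json")  # writer B is stale
except CommitConflictError:
    conflict = True  # B would re-read v2, re-apply its changes and retry

print(catalog.current("db.events"), conflict)  # metadata/v2.metadata.json True
```

Because readers always follow the pointer, they see either the old metadata file or the new one, never a half-finished write.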

How does this improve on Hadoop?

When a query engine such as Trino reads an Iceberg table it:

- Opens metadata.json to discover the current snapshot.

- Reads only that snapshot’s manifest list.

- Loads the manifests in that list, skipping any whose partition summaries rule out the query's filter.

- Opens only the Parquet files whose statistics show they may contain rows relevant to the query.

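If the query filters on WHERE year = 2023 and a manifest records that a file contains only year = 2022, that file is skipped without ever being opened. Hive, by contrast, tracks only partition directories in its metastore, so it must list every file in each matching directory and open it to filter rows, which is slow and expensive, especially on object storage. Iceberg's centralised metadata enables file-level pruning without any directory listing, and adds snapshot roll-backs that traditional Hadoop tables cannot offer.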
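The skip decision in the year = 2023 example comes down to comparing the query value with the lower and upper bounds a manifest records for each column of each data file. The sketch below assumes integer columns and a single equality predicate; `FileStats`, `may_contain_eq` and `plan_scan` are hypothetical names, and Iceberg's real evaluator also uses null counts and supports many more predicate types.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Per-file entry from a manifest (simplified to integer columns)."""
    path: str
    lower: dict[str, int]  # minimum value of each column in the file
    upper: dict[str, int]  # maximum value of each column in the file


def may_contain_eq(f: FileStats, column: str, value: int) -> bool:
    """False only when the bounds prove no row can have column == value."""
    if column not in f.lower or column not in f.upper:
        return True  # no statistics: the file has to be read to be safe
    return f.lower[column] <= value <= f.upper[column]


def plan_scan(files: list[FileStats], column: str, value: int) -> list[str]:
    """Return the files a query with WHERE column = value still needs to open."""
    return [f.path for f in files if may_contain_eq(f, column, value)]


files = [
    FileStats("data/f1.parquet", {"year": 2022}, {"year": 2022}),  # only 2022
    FileStats("data/f2.parquet", {"year": 2023}, {"year": 2023}),  # only 2023
    FileStats("data/f3.parquet", {"year": 2021}, {"year": 2024}),  # range spans 2023
    FileStats("data/f4.parquet", {}, {}),                          # no stats recorded
]

print(plan_scan(files, "year", 2023))
# ['data/f2.parquet', 'data/f3.parquet', 'data/f4.parquet']
```

Note that `f3` is kept even if it happens to contain no 2023 rows: min/max bounds can prove that a value is absent, never that it is present, so pruning is always conservative.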

Simplified visual summary

| Feature | Traditional Hadoop | Apache Iceberg |
|---|---|---|
| Metadata | External, sparse | Internal, detailed & versioned |
| Consistency | Not guaranteed | Snapshot atomicity & time-travel |
| Pruning | Limited | Column stats for fast pruning |
| Schema / Partition changes | Rigid, painful | Flexible evolution, no re-processing |
| Versioning | Absent | Snapshots & roll-back |
| Cloud-friendly | Limited | Designed for object storage |
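Schema evolution without re-processing works because Iceberg tracks every column by a unique field ID rather than by name or position. Renaming or adding a column is therefore a metadata-only change: old data files are read by ID, and a column missing from an old file comes back as null. The sketch below assumes rows are plain dicts keyed by field ID, a simplification of how a real reader resolves IDs from the Parquet file schema; `read_row` and the schemas are hypothetical.

```python
from __future__ import annotations


def read_row(stored: dict[int, object], schema: dict[int, str]) -> dict[str, object]:
    """Project a row stored by field ID onto the current schema's column names.

    Columns are matched by ID, never by name; an ID the file does not contain
    (a column added after the file was written) is returned as None (null).
    """
    return {name: stored.get(field_id) for field_id, name in schema.items()}


# Schema when the file was written: field 2 was called "yr".
schema_v1 = {1: "id", 2: "yr"}
# Current schema: field 2 renamed to "year", field 3 added. No file is rewritten.
schema_v2 = {1: "id", 2: "year", 3: "country"}

old_row = {1: 42, 2: 2023}  # a row in a file written under schema_v1

print(read_row(old_row, schema_v1))  # {'id': 42, 'yr': 2023}
print(read_row(old_row, schema_v2))  # {'id': 42, 'year': 2023, 'country': None}
```

Matching by name instead would break as soon as a column is renamed, which is one reason name-based Hive tables often need data rewritten after schema changes.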

Need training on Iceberg tables or help applying them to your data lake? Get in touch and one of our experts will contact you.
