What is dbt (data build tool) and how it works

Written by Javier Esteban · 27 July 2025

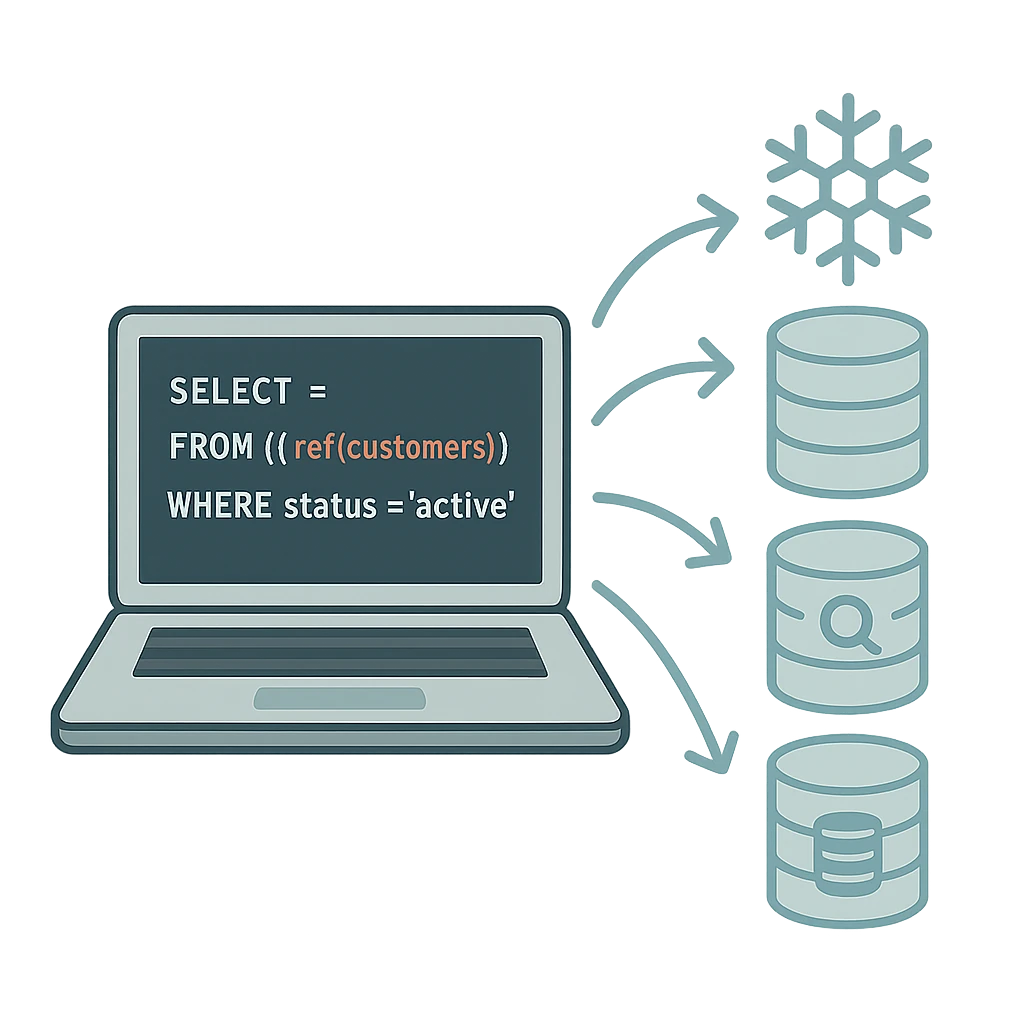

dbt (data build tool) lets data teams transform warehouse data with clean, version‑controlled SQL. It is a smart SQL compiler and scheduler: you write modular queries, and dbt tells the warehouse what to run and in which order.

How dbt Works

dbt uses Jinja2 (a Python templating engine) to turn .sql files containing dynamic directives ({{ }} and {% %}) into plain SQL.

Example template:

SELECT *

FROM {{ ref('customers') }}

WHERE status = 'active'

Compiles to:

SELECT *

FROM my_schema.customers

WHERE status = 'active'

dbt then sends the SQL to your warehouse or query engine (Snowflake, BigQuery, Redshift, Athena, Databricks, etc.). It doesn’t execute SQL itself—it orchestrates execution order, builds dynamic SQL, manages metadata, tests and docs.

Typical Project Layout

my_dbt_project/

│

├── dbt_project.yml # Main project config

│

├── models/ # SQL transformations

│ ├── staging/ # Near‑source staging models

│ ├── marts/ # Business or metric models

│ └── ... # Extra layers (intermediate, core, etc.)

│

├── macros/ # Re‑usable Jinja functions

├── seeds/ # CSVs loadable as tables

├── snapshots/ # Historical change tracking

├── tests/ # Custom or extra tests

└── target/ # Generated artefacts (compiled SQL, logs, docs)

| File / Folder | Purpose |

|---|---|

dbt_project.yml |

Structure, global vars, defaults, paths |

profiles.yml |

Warehouse connection (user, DB, schema, etc.) |

models/ |

SQL defining transformations |

macros/ |

Re‑usable SQL functions in Jinja |

seeds/ |

CSVs promoted to tables (catalogues, fixtures, tests) |

snapshots/ |

Historical data capture |

tests/ |

Custom SQL tests |

target/ |

Compiled SQL, logs, HTML docs, manifests |

Execution Flow

- Command – you run

dbt run(ordbt build,test,docs generate, etc.). - Config & Profiles – dbt reads

dbt_project.ymlandprofiles.yml. - Parse Models – it scans

models/, builds a dependency graph (DAG). - Template Render – Jinja replaces

{{ }}/{% %}expressions using:- Variables

- User macros (

/macros) - Native functions (

ref(),source(),config(), …)

- Order Determination – respects dependencies (A → B → C). Independent branches run in parallel.

- Execution – submits compiled SQL to the warehouse in the correct order.

Summary

dbt makes it simple to develop SQL‑centric pipelines:

- DAG‑aware dependencies, automated tests and rich documentation.

- Works with cloud‑native warehouses like Snowflake, BigQuery, Redshift, Athena, Databricks SQL.

- Integrates smoothly with Git, CI/CD and orchestrators such as Airflow or Prefect.

Need help levelling up your analytics engineering workflow? Chat with Crow Tech—dbt is our daily bread.

Not quite ready for a consultation?

Drop us a message, and we'll respond with the information you're looking for.