When to Use Apache Iceberg Tables

Written by Javier Esteban · 21 June 2025

This article looks at when your data-lake tables should use Apache Iceberg and when a different table format will serve you better.

Iceberg strengths

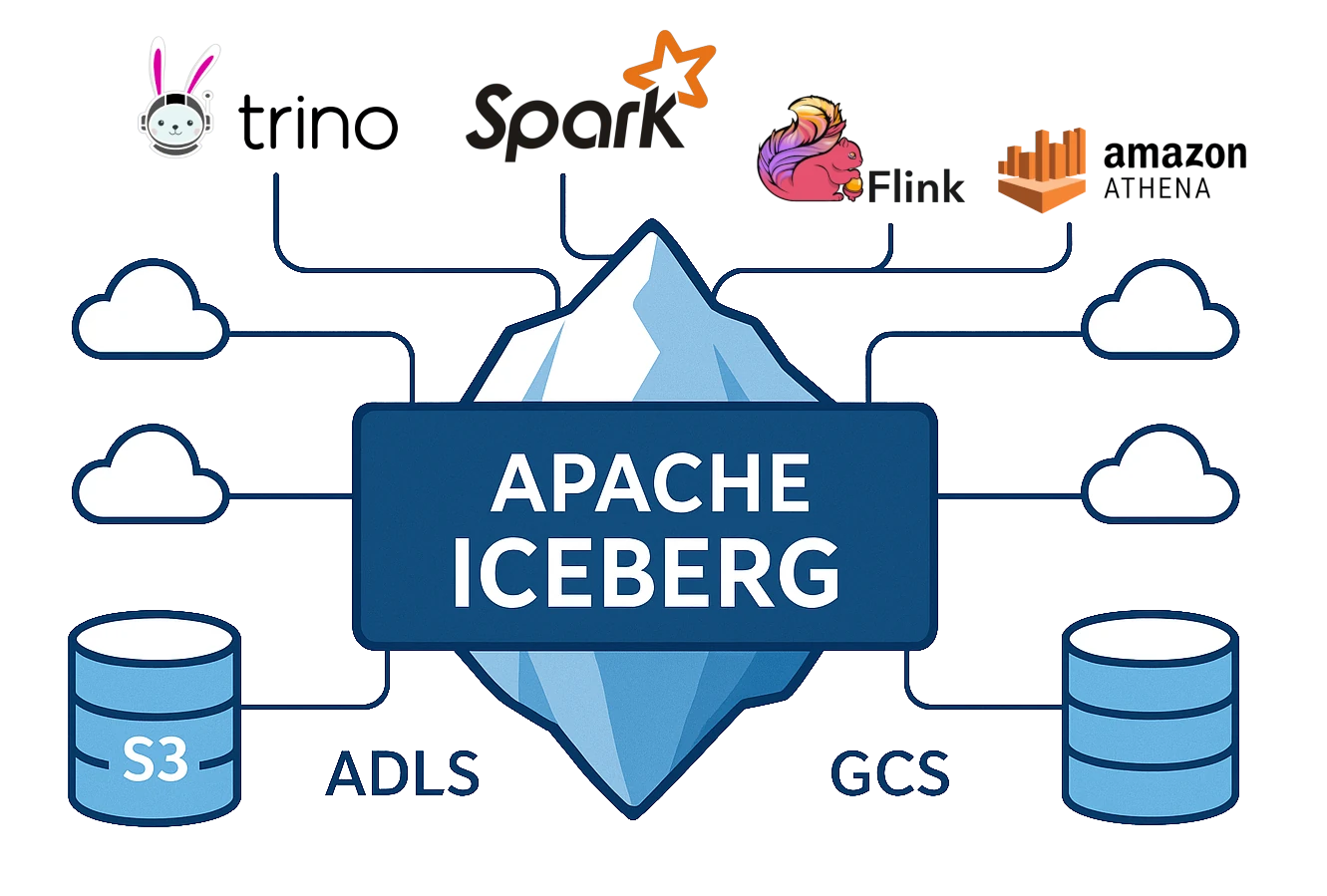

Iceberg was designed so that many engines can work on the same transactional table: Trino, Spark, Athena and Dremio all speak Iceberg. As we explained in our previous article, its rich metadata, including per-file column statistics, lets engines skip irrelevant files and explore massive datasets efficiently. Another headline feature is versioning: every commit produces a snapshot, and you can roll a table back to any snapshot that has not yet been expired.
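To make the versioning idea concrete, here is a minimal sketch in plain Python. It is a toy model, not the Iceberg API: `ToyTable`, `Snapshot`, `append` and `rollback_to` are hypothetical names chosen for illustration. The point it shows is that a snapshot is an immutable list of files, so a rollback only moves a pointer and rewrites no data.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    files: frozenset  # immutable set of data-file names visible in this version


@dataclass
class ToyTable:
    """Toy stand-in for a versioned table (hypothetical, not the Iceberg API)."""
    snapshots: List[Snapshot] = field(default_factory=list)
    current_id: Optional[int] = None

    def files_at(self, snapshot_id: int) -> frozenset:
        """Return the files visible in a given snapshot."""
        for snap in self.snapshots:
            if snap.snapshot_id == snapshot_id:
                return snap.files
        raise KeyError(f"unknown snapshot {snapshot_id}")

    def append(self, *new_files: str) -> int:
        """Create a new snapshot = current snapshot's files + the new files."""
        base = frozenset() if self.current_id is None else self.files_at(self.current_id)
        snap = Snapshot(len(self.snapshots) + 1, base | frozenset(new_files))
        self.snapshots.append(snap)
        self.current_id = snap.snapshot_id
        return snap.snapshot_id

    def rollback_to(self, snapshot_id: int) -> None:
        """Point the table at an older snapshot; no data file is touched."""
        self.files_at(snapshot_id)  # raises KeyError if the snapshot is unknown
        self.current_id = snapshot_id


table = ToyTable()
v1 = table.append("a.parquet")
v2 = table.append("b.parquet")
table.rollback_to(v1)
current_files = sorted(table.files_at(table.current_id))  # only a.parquet is visible
```

After the rollback the second snapshot still exists and can be read with `files_at(v2)`, which is the same idea that makes time-travel queries possible.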

Because Iceberg tracks every file explicitly in its metadata and never relies on file renames, it plays nicely with cloud object stores such as S3, ADLS and GCS, whether you run serverless or on your own clusters. In short, Iceberg is an excellent choice for both small and very large datasets that must remain portable across clouds.

Limitations

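Iceberg handles concurrent writes with optimistic concurrency rather than locks, so heavy write contention needs care. If two jobs write to the same table simultaneously they both read the same base snapshot, but only the first commit succeeds as-is; the second is rejected because its parent snapshot has changed. The losing writer then has to retry against the new snapshot. Plain appends usually go through on retry, but conflicting updates, deletes or compactions can fail outright and must be re-run, so many writers hitting one table benefit from external coordination.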
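The race described above can be sketched as a compare-and-swap on the table's current snapshot pointer. This is a simplified illustration under stated assumptions: `ToyCatalog`, `CommitConflict` and `commit_with_retry` are hypothetical names, and a real catalog performs the atomic swap on a metadata pointer rather than on an integer.

```python
import threading


class CommitConflict(Exception):
    """Raised when the table has moved on since the writer read it."""


class ToyCatalog:
    """Hypothetical catalog holding a single table's current snapshot id."""

    def __init__(self) -> None:
        self._lock = threading.Lock()  # makes the compare-and-swap atomic
        self.current_snapshot = 0

    def commit(self, expected_parent: int) -> int:
        """Advance the table only if it is still at the snapshot the writer read."""
        with self._lock:
            if self.current_snapshot != expected_parent:
                raise CommitConflict(
                    f"expected parent {expected_parent}, found {self.current_snapshot}"
                )
            self.current_snapshot += 1
            return self.current_snapshot


def commit_with_retry(catalog: ToyCatalog, max_attempts: int = 3) -> int:
    """Refresh the base snapshot and try again when a commit loses the race."""
    for attempt in range(1, max_attempts + 1):
        parent = catalog.current_snapshot  # re-read the latest table state
        try:
            return catalog.commit(parent)
        except CommitConflict:
            if attempt == max_attempts:
                raise
    raise ValueError("max_attempts must be at least 1")


catalog = ToyCatalog()
base = catalog.current_snapshot      # both jobs read snapshot 0
first = catalog.commit(base)         # job A commits first
try:
    catalog.commit(base)             # job B still points at the stale parent
    second_failed = False
except CommitConflict:
    second_failed = True
retried = commit_with_retry(catalog)  # job B refreshes, then commits
```

The retry is cheap here because the toy commit carries no data. In practice a retry is only safe when the writer's changes do not conflict with what was committed in the meantime, which is why queueing writers is often simpler than retrying.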

It is also inefficient for extremely high-frequency or row-by-row writes: every commit creates new data files (typically Parquet), new manifests and a new snapshot. If your workload needs millisecond latency, as a mobile-app back end does, stick with an OLTP store such as MySQL or PostgreSQL.
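A quick back-of-the-envelope calculation shows why commit frequency matters. The sketch below assumes, for illustration only, that each commit writes at least one data file and exactly one snapshot; `commits_per_day` is a hypothetical helper, not part of any library.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def commits_per_day(interval_seconds: int, files_per_commit: int = 1) -> dict:
    """Count the snapshots and data files produced in one day of regular commits."""
    commits = SECONDS_PER_DAY // interval_seconds
    return {"snapshots": commits, "data_files": commits * files_per_commit}


every_second = commits_per_day(1)       # near row-by-row ingestion
every_hour = commits_per_day(60 * 60)   # hourly batch load
```

Under these assumptions, committing once per second produces 86,400 snapshots and at least as many small files per day, against 24 for an hourly batch. All of that metadata has to be read at query-planning time and cleaned up later.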

Comparison with its competitors

Iceberg is the best all-round option when you need analytical scalability, strict consistency and engine independence.

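- Delta Lake was built for Spark SQL from day one and is still most tightly integrated there. Its transaction log copes well with frequent small updates inside a Spark-centric stack, a pattern Iceberg handles less gracefully. If your entire world runs on Spark and you need frequent row-level updates, choose Delta, but keep in mind that neither format replaces a true OLTP database.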

- Apache Hudi is optimised for continuous ingestion and works tightly with Flink and Spark. It scales CDC and real-time pipelines better, but its metadata is less detailed and its Merge-on-Read mode can slow queries, whereas Iceberg is tuned for fast, clean reads once data has landed.

Use-case guide

| Use-case | Iceberg? | Notes |
|---|---|---|
| Daily or hourly batch loads | ✅ Yes | Each batch produces a neat snapshot. |
| Micro-batches every 5-30 min | ✅ Yes (with compaction) | Tune compaction to avoid many tiny files. |
| Streaming with < 10 s latency | ❌ Use Hudi or Delta | Snapshot overhead too high. |
| Row-level event ingestion | ❌ Use OLTP or Kafka | Not designed for ultra-granular, high-rate writes. |
| Concurrent writers | ⚠️ Needs coordination | Commits are optimistic; queue commits or handle retries for conflicting writers. |
| Heavy OLAP / BI queries | ✅ Yes | Excellent file pruning and statistics with Trino, Dremio, Spark. |
| Frequent schema evolution | ✅ Yes | Add, rename, drop or reorder columns and widen types without rewriting data. |
| Time-travel audits | ✅ Yes | Snapshots let you query any retained historical state. |
| Cold-storage lakehouse on S3/ADLS/GCS | ✅ Yes | No file renames, cloud-native. |
| Multi-cloud portability | ✅ Yes | Self-contained metadata avoids lock-in. |
| Pure Databricks ecosystem | ❌ Prefer Delta | Delta is natively integrated. |
| Reproducible ML pipelines with versioned data | ✅ Yes | Snapshots and schema evolution support laboratory-grade reproducibility. |
| Sub-second response for mobile apps | ❌ Use PostgreSQL/MySQL | Iceberg read latency is too high for real-time UI traffic. |
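The compaction advice for micro-batches boils down to grouping many small files into fewer files close to a target size. The sketch below illustrates only the planning step with a greedy first-fit-decreasing grouping. `plan_compaction` is a hypothetical helper, the 128 MB target is an arbitrary illustration value, and real engines use their own strategies and defaults.

```python
from typing import List


def plan_compaction(file_sizes_mb: List[int], target_mb: int = 128) -> List[List[int]]:
    """Group files so that each group's total size stays within target_mb.

    Greedy first-fit-decreasing: take the largest files first and put each
    one into the first group that still has room for it.
    """
    groups: List[List[int]] = []
    for size in sorted(file_sizes_mb, reverse=True):
        for group in groups:
            if sum(group) + size <= target_mb:
                group.append(size)
                break
        else:
            groups.append([size])  # no existing group has room: start a new one
    return groups


small_files = [4, 8, 16, 32, 60, 64, 100]  # sizes in MB after a run of micro-batches
plan = plan_compaction(small_files)
# plan == [[100, 16, 8, 4], [64, 60], [32]]: seven files become three
```

Each group would be rewritten as one larger file and committed as a new snapshot, so queries open three files instead of seven while the older snapshots remain readable until they are expired.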

Still unsure which table format will unlock the most value from your data? Get in touch—our experts will be happy to help.
